We have been writing software for more than half a century. During this time we have learnt really well that dealing with large problems all at once is not productive. Things go wrong, plans do not hold, maintenance turns into a nightmare. We’ve got to break large tasks into a few smaller parts whose relations are easy to oversee, and keep breaking these parts down until the implementation becomes clear.

This approach makes sense and we apply it everywhere. And it does work really well… well, nearly everywhere. How do we manage source code? We call every logically complete unit that can be released, delivered and used independently of others a project. Therefore we create a separate source repository for it and version it there. Yes, of course, not all projects are trivial, so they may consist of a few modules, but all of them are managed within one repository. In most cases our project needs external libraries too, so we let build tools resolve external dependencies from artifact repositories.

What does this approach give us?

- Clean boundaries among projects

- Code that is easier to reuse in other contexts

- Fine-tuned access control to the sources

- Small, fast builds and tests

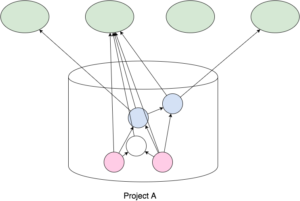

In the image above we see Project A with two applications to deliver, which use three other modules. All these modules depend on a few external libraries. Modules within the project depend on the very latest versions of each other. If the project uses Maven, the version of such a dependency is often expressed as ${project.version}, meaning: use whatever you have right now.

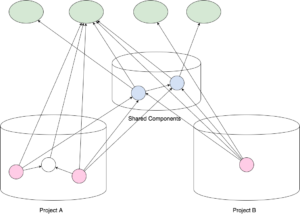

Let’s assume the two applications in our project are iOS and Mac weather apps and we decided to version their code within one repository. Now we are adding an Android app, which shares some logic with the first two applications. Since its release cycle won’t match the cycle of Project A, we create a separate repository for it and move the shared components to a separate repository too.

Everything is still very clear and logical. Every project has its own purpose. Changes in Shared Components do not affect delivery projects until they decide to start using the changed code. We still keep things separate and we can focus on one thing at a time.

However, what did we have to do before creating the repository for Project B?

- Create Shared Components repository

- Copy the code of the components from Project A to Shared Components (with a drop in their test coverage)

- Release Shared Components

- Delete components from Project A

- Add external dependencies to Project A

Before continuing I’d like to ask you to answer a few questions. Quickly, just for yourself.

- Did you ever add a unit test to a shared library just to silence an IDE complaint about an unused public method?

- Did you ever extend a class from a shared library in order to override a method and work around a bug?

- When do you think about updating the versions of your project’s external dependencies?

- What is Semantic Versioning and what is it used for?

- What are Git submodules and subtrees for?

- What is the @deprecated annotation for?

I think by now you already suspect where I’m going with this. The tendency to break source code down into multiple repositories brings serious challenges. Let me try to group them.

Cross-project code changes

- Because the scope of every project is kept small, refactoring shared code very quickly turns into an incompatible code change

- Long chains of releases due to chains of dependencies

What happens if Project A has a problem due to a bug in Shared Components? We need to release Shared Components with a fix, change Project A to start using the fixed version and then release Project A. This might sound doable in our example, however the length of the Spring Boot dependency chain (only within Spring projects) is 8. And it is that short only because all 79 modules of Spring Boot are in a single project and repo

- Temptation to just pick a version and “stabilize” (meaning, stagnate): refusing to use the latest version of shared libraries delays the discovery of bugs

- Growth of obsolete and deprecated code

Authors of shared libraries very quickly lose track of their users. Just to keep compatibility, obsolete and deprecated code is hardly ever removed

- Loss of atomicity of large cross-module commits

If somebody dares to work across projects, the committed changes are not atomic
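The release chain described above can be made concrete with a small sketch. It assumes a hand-written dependency map for the three projects from the example; the function names `releases_needed` and `chain_length` are hypothetical and only serve the illustration.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Assumed dependency map for the example: project -> projects it depends on.
DEPENDS_ON = {
    "Project A": {"Shared Components"},
    "Project B": {"Shared Components"},
    "Shared Components": set(),
}


def releases_needed(fixed, depends_on):
    """List every project that must be released, dependencies first,
    before a fix made in `fixed` reaches all of its users."""
    # Collect the fixed project plus all direct and transitive dependents.
    affected = {fixed}
    grew = True
    while grew:
        grew = False
        for project, deps in depends_on.items():
            if project not in affected and deps & affected:
                affected.add(project)
                grew = True
    # Order the affected projects so that each one follows its dependencies.
    subgraph = {p: depends_on[p] & affected for p in sorted(affected)}
    return list(TopologicalSorter(subgraph).static_order())


def chain_length(project, depends_on):
    """Number of projects on the longest dependency chain starting at `project`.
    Assumes the graph has no cycles."""
    return 1 + max(
        (chain_length(dep, depends_on) for dep in depends_on[project]),
        default=0,
    )


if __name__ == "__main__":
    print(releases_needed("Shared Components", DEPENDS_ON))
    print(chain_length("Project A", DEPENDS_ON))
```

With only two levels the list is short, but every extra level in the chain adds another release that has to happen before the fix is visible at the top.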

Dependency conflicts

- External dependency versions across multiple repos are more likely to conflict. In our example both Project A and Shared Components depend on the same external library, but it becomes difficult to keep the direct and the transitive dependency on the same version. If they differ, the potential problem won’t be noticed at compilation, but at runtime

- A diamond dependency is a more complicated case of the same problem

- These problems become even more interesting because dependencies are set among modules, but we version and release projects. For example, 24 modules of OAuth for Spring Security depend on modules of Spring Boot. However at the same time 2 modules of Spring Boot depend on OAuth for Spring Security. What does it mean? If an incompatible change is introduced in one of the two, there is a risk of running into a problem at runtime… until both are released again

In larger projects figuring out compatible versions of internal and external dependencies becomes somebody’s full-time job.
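To illustrate the direct versus transitive mismatch, here is a minimal sketch that walks declared dependencies and reports libraries requested at more than one version. The library name `json-lib`, all version numbers and the function `find_conflicts` are invented for the example, and the model is simplified: it ignores that different versions of one library may declare different dependencies.

```python
from collections import defaultdict

# Hypothetical declarations: project or library -> {dependency: version}.
DECLARED = {
    "Project A": {"shared-components": "1.2.0", "json-lib": "2.9.0"},
    "shared-components": {"json-lib": "2.7.1"},
}


def find_conflicts(root, declared):
    """Walk everything reachable from `root` and return the libraries that
    are requested at more than one version, with who requested what."""
    requested = defaultdict(set)  # library -> {(version, requested_by)}
    seen = set()
    stack = [root]
    while stack:
        current = stack.pop()
        if current in seen:  # also protects against circular dependencies
            continue
        seen.add(current)
        for library, version in declared.get(current, {}).items():
            requested[library].add((version, current))
            stack.append(library)
    return {
        library: sorted(requests)
        for library, requests in requested.items()
        if len({version for version, _ in requests}) > 1
    }


if __name__ == "__main__":
    for library, requests in find_conflicts("Project A", DECLARED).items():
        print(library, requests)
```

A build tool will silently pick one of the two versions according to its own resolution rules, which is why the mismatch tends to surface only at runtime.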

Re-use and sharing of the code

- Multiple repositories and projects create multiple development environments with possibly different requirements. This is an additional obstacle for developers to start working with extra projects

- Not fixing bugs in “not my code”

Did you notice a bug in shared code? Will you fix it, submit a change and wait for a new release? Or will you simply work around the bug in your own code?

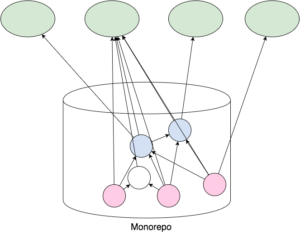

Most OSS projects, which limit their scope, can safely ignore these problems. However, the scale of these problems grows with the company’s source base. Therefore companies like Google, Facebook, Twitter, Digital Ocean, Salesforce and Etsy (there must be more) use the monolithic repository approach. How is it different? Let’s draw our three applications in a monorepo.

Now all three applications use the very latest version of shared components and this resolves the problems above.

- Developers can work with the entire source base and the IDE will help them with refactoring

- Noticed a bug in shared code? Fix it and release your application. There is no need for releases in between

- Changed shared code is used right away by all applications – immediate feedback on changes

- Obsolete and deprecated code can be removed – your IDE and tests know its users

- All hassle with internal dependencies is gone – use the latest, which is the same for all modules

- Code analyzers start seeing all the code – no copy/pasting across projects

- There are still external dependencies, but things like <dependencyManagement> help to keep them aligned within one project

- Developers have to create only one development environment… and its increased complexity might make you think about unifying the requirements of the applications

By no means do monolithic repositories imply the creation of large monolithic applications. A monolithic repository is an approach to handling code, to making it visible and reusable. All practices for packaging applications and deploying them remain. It’s perfectly possible to deliver microservices from a monolithic repository, just as, unfortunately, it’s possible to compose monolithic giants from hundreds of small repositories.

Yes, the monolithic repository approach has its own problems too

- Popular version control systems do not allow fine-tuning of authorization to change code in individual directories

- When the code base grows even further, they stop managing the volume well

- They do not enable selective checkout, and this might overload the IDE

- Builds become heavier and slower

However, these are implementation problems, problems of the development tools we’ve built to work with small repositories. Should we cheer for Git in every situation? Should we run a “clean” build every time? Large companies have been creating their own tooling and it is gradually becoming available. Here are a few examples

- Bazel is Google’s own build tool, now publicly available in Beta

- Buck is a build system developed and used by Facebook

- Pants is a collaborative open-source project, built and used by Twitter, Foursquare, Square, Medium
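One way to avoid running a “clean” build every time is to rebuild only the targets a change can reach through the dependency graph. The sketch below shows that general idea under stated assumptions; it is not how Bazel, Buck or Pants are implemented, and the target names and the function `affected_targets` are hypothetical.

```python
from collections import deque

# Hypothetical monorepo layout: build target -> targets it depends on.
DEPS = {
    "ios-app": {"weather-core", "ui-kit"},
    "mac-app": {"weather-core", "ui-kit"},
    "android-app": {"weather-core"},
    "weather-core": set(),
    "ui-kit": set(),
}


def affected_targets(changed, deps):
    """Return the changed targets plus everything that depends on them,
    directly or transitively. Only these need to be rebuilt and retested."""
    # Invert the graph: target -> targets that depend on it.
    dependents = {}
    for target, target_deps in deps.items():
        dependents.setdefault(target, set())
        for dep in target_deps:
            dependents.setdefault(dep, set()).add(target)

    # Breadth-first walk from the changed targets along the inverted edges.
    affected = set(changed)
    queue = deque(changed)
    while queue:
        current = queue.popleft()
        for dependent in dependents.get(current, ()):
            if dependent not in affected:
                affected.add(dependent)
                queue.append(dependent)
    return affected


if __name__ == "__main__":
    print(sorted(affected_targets({"ui-kit"}, DEPS)))
```

In this layout a change to `ui-kit` leaves `android-app` untouched, so its build and tests can be skipped.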

I’m not claiming that a monolithic repository is a panacea for all software development problems. However, if your company is growing, has an open and collaborative culture, and the health of your code base matters, it is a very viable approach to handling code.

References

- Why Google Stores Billions of Lines of Code in a Single Repository

- Advantages of monolithic version control

- Why We Should Use Monolithic Repositories and Why We Should Not Return to Monolithic Repositories

- Monorepos For True CI

- Taming Your Go Dependencies